Hadoop Word Count - step by step execution

Step 1: Open Eclipse -> File -> New -> Other...

Select Maven -> Maven Project and click Next.

Configure the wizard exactly as shown in the following picture.

Type org.apache.maven in the Filter box, select the archetype from the filtered list, and click Next.

Enter the Group Id and Artifact Id as shown in the picture below, or choose any values you like.

The Maven project is now created. Next, write the program, starting with Step 2.

Step 2:

pom.xml is the most important file: it holds the Maven configuration for the project, including its dependencies. Add the Hadoop dependency to the project by editing pom.xml.

Copy the following dependency and paste it inside the `<dependencies>` element of pom.xml.

```xml
<dependency>
    <groupId>org.apache.hadoop</groupId>
    <artifactId>hadoop-core</artifactId>
    <version>1.2.1</version>
</dependency>
```
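After you save pom.xml, Eclipse (through Maven) should download hadoop-core and add it to the project classpath. A quick way to confirm this is to try loading the Hadoop classes the program imports. The sketch below is a hypothetical helper (the class name HadoopClasspathCheck is an assumption, not part of the tutorial); it uses only the JDK's `Class.forName`, so it compiles even when the dependency is missing and simply reports what it finds.

```java
// Hypothetical helper, not part of the original tutorial: reports whether the
// Hadoop classes used by WordCount can be loaded from the current classpath,
// i.e. whether Maven has resolved the hadoop-core dependency.
public class HadoopClasspathCheck {

    // Returns true if a class with the given fully qualified name can be loaded.
    static boolean isOnClasspath(String className) {
        try {
            // initialize = false: load the class without running static initializers
            Class.forName(className, false, HadoopClasspathCheck.class.getClassLoader());
            return true;
        } catch (ClassNotFoundException | LinkageError e) {
            return false;
        }
    }

    public static void main(String[] args) {
        // A few of the classes imported by the WordCount program
        String[] required = {
            "org.apache.hadoop.conf.Configuration",
            "org.apache.hadoop.mapreduce.Job",
            "org.apache.hadoop.mapreduce.Mapper",
            "org.apache.hadoop.mapreduce.Reducer"
        };
        for (String name : required) {
            System.out.println((isOnClasspath(name) ? "found:   " : "MISSING: ") + name);
        }
    }
}
```

If any line prints MISSING after the dependency was added, the Maven project probably needs to be refreshed (for example with Maven -> Update Project) before continuing.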

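Step 3:

Add a new class file to the project as shown below.

Enter the class name and click Finish. Here the class is named WordCount in the package npu.edu.hadoop, because the public class in Step 4 is WordCount and Java requires the file name to match the public class name.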

Step 4: The following is the basic Word Count program. It counts how many times each word occurs in the input.

Copy this code into the class you created in Eclipse.

```java
package npu.edu.hadoop;

import java.io.IOException;
import java.util.StringTokenizer;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.IntWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Job;
import org.apache.hadoop.mapreduce.Mapper;
import org.apache.hadoop.mapreduce.Reducer;
import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;
import org.apache.hadoop.util.GenericOptionsParser;

public class WordCount {

    // Mapper: for each input line, emit (word, 1) for every whitespace-separated token
    public static class TokenizerMapper extends Mapper<Object, Text, Text, IntWritable> {

        private final static IntWritable one = new IntWritable(1);
        private Text word = new Text();

        @Override
        public void map(Object key, Text value, Context context)
                throws IOException, InterruptedException {
            StringTokenizer itr = new StringTokenizer(value.toString());
            while (itr.hasMoreTokens()) {
                word.set(itr.nextToken());
                context.write(word, one);
            }
        }
    }

    // Reducer: receives (word, [1, 1, ...]) and writes (word, total count)
    public static class IntSumReducer extends Reducer<Text, IntWritable, Text, IntWritable> {

        private IntWritable result = new IntWritable();

        @Override
        public void reduce(Text key, Iterable<IntWritable> values, Context context)
                throws IOException, InterruptedException {
            int sum = 0;
            for (IntWritable val : values) {
                sum += val.get();
            }
            result.set(sum);
            context.write(key, result);
        }
    }

    public static void main(String[] args) throws Exception {
        Configuration conf = new Configuration();
        // Strip generic Hadoop options (e.g. -D key=value); what remains should be <in> <out>
        String[] otherArgs = new GenericOptionsParser(conf, args).getRemainingArgs();
        if (otherArgs.length != 2) {
            System.err.println("Usage: WordCount <in> <out>");
            System.exit(2);
        }
        // new Job(conf, name) works with hadoop-core 1.2.1 (deprecated in Hadoop 2.x)
        Job job = new Job(conf, "word count");
        job.setJarByClass(WordCount.class);
        job.setMapperClass(TokenizerMapper.class);
        // The reducer doubles as a combiner because summing is associative and commutative
        job.setCombinerClass(IntSumReducer.class);
        job.setReducerClass(IntSumReducer.class);
        job.setOutputKeyClass(Text.class);
        job.setOutputValueClass(IntWritable.class);
        FileInputFormat.addInputPath(job, new Path(otherArgs[0]));
        FileOutputFormat.setOutputPath(job, new Path(otherArgs[1]));
        // Block until the job finishes; exit code 0 on success, 1 on failure
        System.exit(job.waitForCompletion(true) ? 0 : 1);
    }
}
```
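To see what the job computes without a Hadoop installation, here is a plain-Java sketch that mimics its three phases on a small in-memory input: a "map" step that splits lines with StringTokenizer and emits (word, 1) like TokenizerMapper, a "shuffle" step that groups values by key in sorted order, and a "reduce" step that sums each group like IntSumReducer. The class LocalWordCount is hypothetical and uses no Hadoop classes; it illustrates the logic only, not how Hadoop actually distributes and executes the job.

```java
import java.util.AbstractMap;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.StringTokenizer;
import java.util.TreeMap;

// Hypothetical, Hadoop-free illustration of the WordCount logic.
public class LocalWordCount {

    // "Map" phase: like TokenizerMapper, emit (word, 1) for every whitespace-separated token.
    static List<Map.Entry<String, Integer>> map(List<String> lines) {
        List<Map.Entry<String, Integer>> pairs = new ArrayList<>();
        for (String line : lines) {
            StringTokenizer itr = new StringTokenizer(line);
            while (itr.hasMoreTokens()) {
                pairs.add(new AbstractMap.SimpleEntry<String, Integer>(itr.nextToken(), 1));
            }
        }
        return pairs;
    }

    // "Shuffle/sort" phase: group all values by key; TreeMap keeps the keys sorted,
    // mirroring the sorted keys a Hadoop reducer receives.
    static Map<String, List<Integer>> shuffle(List<Map.Entry<String, Integer>> pairs) {
        Map<String, List<Integer>> groups = new TreeMap<>();
        for (Map.Entry<String, Integer> p : pairs) {
            groups.computeIfAbsent(p.getKey(), k -> new ArrayList<>()).add(p.getValue());
        }
        return groups;
    }

    // "Reduce" phase: like IntSumReducer, sum the values collected for each word.
    static Map<String, Integer> reduce(Map<String, List<Integer>> groups) {
        Map<String, Integer> counts = new TreeMap<>();
        for (Map.Entry<String, List<Integer>> g : groups.entrySet()) {
            int sum = 0;
            for (int v : g.getValue()) {
                sum += v;
            }
            counts.put(g.getKey(), sum);
        }
        return counts;
    }

    public static void main(String[] args) {
        List<String> input = Arrays.asList("hello hadoop", "hello world");
        Map<String, Integer> counts = reduce(shuffle(map(input)));
        // Print one "word<TAB>count" line per word, the same shape as Hadoop's text output
        counts.forEach((word, n) -> System.out.println(word + "\t" + n));
    }
}
```

Because StringTokenizer splits only on whitespace, tokens such as "Hello," and "hello" count as different words, just as in the real mapper. The job also registers IntSumReducer as a combiner, which pre-sums (word, 1) pairs on the map side; since addition is associative and commutative, the final counts are unchanged and less data has to be shuffled.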

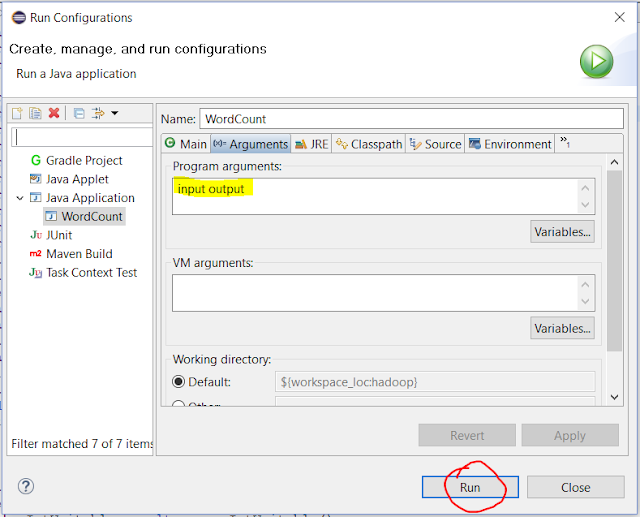

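(Optional) To pass arguments when running inside Eclipse, open Run -> Run Configurations... -> Arguments

and enter two program arguments: the input path and the output path (input output).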
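The two program arguments are the input path and the output path. One detail worth knowing: Hadoop's FileOutputFormat rejects a job whose output directory already exists, so the output path must be new on every run. The sketch below is a hypothetical helper (the class name PrepareLocalRun and the default names "input" and "output" are assumptions) that creates a small local input directory and checks that the output directory does not exist yet. It uses only the JDK.

```java
import java.io.IOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.Arrays;

// Hypothetical helper: prepares the <in> <out> arguments for a local test run.
// The directory names "input" and "output" are assumptions, not requirements.
public class PrepareLocalRun {

    // Creates the input directory (if needed) with one small sample file.
    static Path createSampleInput(Path inputDir) throws IOException {
        Files.createDirectories(inputDir);
        Path sample = inputDir.resolve("sample.txt");
        Files.write(sample, Arrays.asList("hello hadoop", "hello world"), StandardCharsets.UTF_8);
        return sample;
    }

    // WordCount's output path must not exist yet; Hadoop refuses to overwrite it.
    static boolean outputPathIsFree(Path outputDir) {
        return Files.notExists(outputDir);
    }

    public static void main(String[] args) throws IOException {
        Path input = Paths.get(args.length > 0 ? args[0] : "input");
        Path output = Paths.get(args.length > 1 ? args[1] : "output");
        createSampleInput(input);
        if (!outputPathIsFree(output)) {
            System.err.println("Remove " + output.toAbsolutePath() + " before running WordCount.");
            System.exit(1);
        }
        System.out.println("Program arguments: " + input + " " + output);
    }
}
```

The helper deliberately only reports an existing output directory instead of deleting it, so earlier results are never removed by accident.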

Step 5: If you run the code directly in Eclipse, you will get an error like this one. That is expected: Eclipse is used here only to write and package the program, and the job itself is run with Hadoop.

Right-click the project and select Run As -> Maven install. You should see BUILD SUCCESS in the console.

If the build fails, run Maven install again a couple of times; if it still fails, fix the errors reported in the console.

A successful build produces a `*.jar` file, which you can find in the project's `target` directory.
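Before copying the jar to a Hadoop machine, it can help to confirm that it really contains the compiled WordCount class. By default Maven names the jar `<artifactId>-<version>.jar` inside `target`. The sketch below is a hypothetical helper (the class name JarCheck is an assumption) that opens the jar with the JDK's `java.util.jar.JarFile` and looks for the class entry; pass it the path of your jar.

```java
import java.io.File;
import java.io.IOException;
import java.util.jar.JarFile;

// Hypothetical helper: checks that the jar built by "Maven install"
// contains a given compiled class (by default npu.edu.hadoop.WordCount).
public class JarCheck {

    // Jar entry names use '/' separators, e.g. npu/edu/hadoop/WordCount.class
    static boolean containsClass(File jar, String className) throws IOException {
        String entryName = className.replace('.', '/') + ".class";
        try (JarFile jf = new JarFile(jar)) {
            return jf.getJarEntry(entryName) != null;
        }
    }

    public static void main(String[] args) throws IOException {
        if (args.length != 1) {
            System.err.println("Usage: JarCheck <path-to-jar>");
            System.exit(2);
        }
        File jar = new File(args[0]);
        String mainClass = "npu.edu.hadoop.WordCount";
        boolean found = containsClass(jar, mainClass);
        System.out.println(mainClass + (found ? " found in " : " NOT found in ") + jar);
    }
}
```

If the class is missing, the most likely causes are a build error or the class sitting in a different package than npu.edu.hadoop.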

How to Run the program in Hadoop? Click Here

If you like my material, please like, share, and subscribe to my YouTube channel.
